This post is the first one of a series of four describing all the different commands. We’ll discuss the commands to set up log shipping with dbatools, how to check the status, to check for errors, and to recover in case of an emergency.

What is log shipping

Before we go into the why I want you to know what log shipping is. Log shipping dates back to the early versions of SQL Server. The first article that I could find dates back to the year 2000 and explains how to setup log shipping with SQL Server version 7.

Microsoft has done a lot of work on the log shipping setup process and we no longer have to set up the “poor man’s log shipping” anymore. The setup contains a nice GUI and it makes it easier to set up log shipping for your database(s).

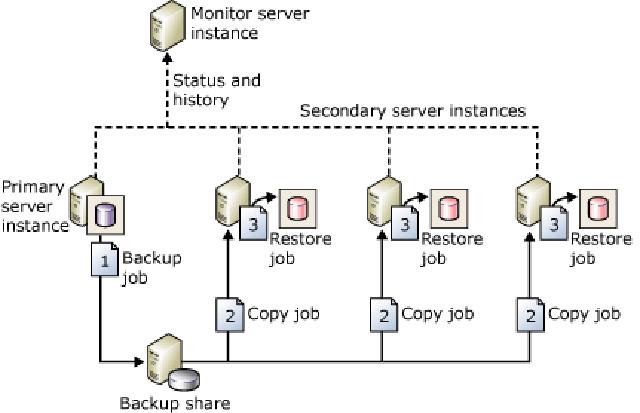

Log shipping is the process of creating a transaction log backup. Copy that backup to a secondary server and restore the log to synchronize the databases.

The image below shows the different elements of a log shipping architecture.

I’m not going to explain in detail what log shipping is or how to set it up using the traditional method. There are lots of websites that have already done a great job describing everything related to log shipping. Here are a couple of websites that could help

Why create a command set

Like I said before, Microsoft has done a great job of automating the log shipping process and making it easier for the user to set up. The GUI describes all the settings needed. Even someone inexperienced could set up log shipping in a short amount of time (given that all the requirements have been met).

Microsoft has simplified the process since the earlier versions of SQL Server, setting up log shipping still takes a lot of time. You have to set up each database individually and most of the time you are doing almost the same. There are ways to automate this using dynamic T-SQL.

However, I found that, at least in my situation, it was prone to errors and it took me more time to troubleshoot it than to set it up manually.

Why we needed to setup log shipping

A couple of months ago my company wanted to create a High Availability solution for our production databases. Researching a solution like clustering for Availability Groups, we looked at architecture decisions, licensing, and costs.

The outcome was that we weren’t able to use any SQL Server Enterprise HA solutions.

Log shipping is not a so-called hot standby that immediately recovers in case a service or database goes down. Instead, it is called a warm standby where there is human interaction needed to recover.

We have about 20 production databases and in my opinion, we are a small shop. Looking at the database size and amount of transactions. We decided to go with log shipping.

It’s well established within SQL Server and not yet deprecated like mirroring. So on a Monday morning, after all the backups were done, I started to configure our databases for log shipping. For me, the process of setting up log shipping was very tedious.

I had to go through each of the databases and use the same settings over and over. The biggest difference would be the database name in the backup or copy directory.

The reason for the command set

In the end, it took me about 3 to 4 days to set it up. This was mostly because I didn’t want to stress the network too much by setting up multiple databases at once. At some point, I made some mistakes and had to start over again. I don’t like to do repetitive tasks and in my daily work, I automate most of those processes.

To set up log shipping for a single database in the GUI you need about 40 clicks and have to insert lots of information in different windows.

That’s where I contacted Chrissy LeMaire ( b | t ) from the dbatools project and asked if it would be interesting to create log shipping commands for the PowerShell module. She was enthusiastic about it and I started to develop the commands.

The command set

Initially, it started with the command “Invoke-DbaLogShipping” but soon after a couple of commands followed. The command set contains the commands

This blog will be the first of four posts where we dive into “Invoke-DbaLogShipping” because we first need to have log shipping setup before we can use the other commands.

The Command Invoke-DbaLogShipping

During the development of the command, I chose to make the process of setting up the log shipping as easy as possible for the user.

My main requirement for the command was that as long as you have the minimal requirements for log shipping you would be able to set it up.

The main command is Invoke-DbaLogShipping. Within log shipping, there are a lot of decisions that can be made. All those decisions ended up with lots of parameters. The command has over 90 different parameters ranging from setting the backup folder to set the name of schedules. I will explain a couple of the most important ones that I think will be used the most:

General

- Force: When this parameter is used, lots of assumptions will be made and you need only a minimum of settings to set up log shipping.

Server

- SourceSqlInstance: The primary instance

- DestinationSqlInstance: The secondary instance

Database

- Database: Database (or databases) that need to be log shipped

Backup

- BackupNetworkPath: Network path where the backups will be saved. This is the parent folder, a child folder for each database will be created in this folder.

- BackupLocalPath: The local path for the backups. Only used by the primary instance and not mandatory

- BackupRetention: How long to keep the backups? The default is 3 days.

- BackupScheduleStartDate: Start date when the backup schedule needs to start

- BackupScheduleStartTime: Start time of the backup schedule. Maybe the backups only take place at a certain time of the day.

- BackupScheduleEndTime: End time of the backup schedule. Maybe the backups only take place at a certain time of the day.

- CompressBackup: Do you want the backup to be compressed? By default, it looks at the SQL Server edition and server settings.

- FullBackupPath: Path to the full backup. Is only used when log shipping is being set up.

- GenerateFullBackup: Instead of using an existing full backup you can use this parameter to create a full backup on the fly during setup.

- UseExistingFullBackup: Besides FullBackupPath and GenerateFullBackup you can also set the option to let the command retrieve the last full backup made for the database.

Copy

- CopyDestinationFolder: Where do the backups need to be copied to

Restore

- DisconnectUsers: Important setting if your secondary database is in read-only mode. Users will be disconnected if the restore process starts and this is parameter was set.

- Standby: Do you want your databases to be in standby mode? By default, the database will be in no-recovery mode.

- StandbyDirectory: Directory where the TUF (transaction undo file) file needs to go for a database. This is needed when using the -Standby parameter.

Setup log shipping using Invoke-DbaLogShipping

Before we look into the command we need to go through the prerequisites of log shipping. The items below are the bare minimum to set up log shipping.

Prerequisites

- At least SQL Server 2000 standard edition

- The primary database needs to be in Full recovery mode.

- A network share/drive that’s accessible for the primary and secondary server

A more sophisticated setup would be:

- A primary server containing the primary databases

- A secondary server containing the secondary databases

- A monitoring server logging all the information

- At least SQL Server 2000 standard edition

- The primary database needs to be in Full recovery mode.

- A separate network share for the backup process

- A separate network share for the copy process

Technically you don’t need multiple servers to set up log shipping. You can set it up with just one single SQL Server instance. In an HA solution, this wouldn’t make sense but technically it’s possible. Having a separate server acting as the monitoring server ensures that when one of the server goes down, the logging of the actions still takes place.

Having a separate network share for both the backup and copy makes it easier to set up security and decide which accounts can access the backups.

The backup share needs to be readable and writable by the primary instance and readable by the secondary instance.

The copy share needs to be accessible and writable for only the secondary instance.

Additional information

- Backup Compression

- Database Status

- Schedules

- Cleanup

Backup Compression

Backup compression was introduced in SQL Server 2008 Enterprise. Beginning in SQL Server 2008 R2, backup compression is supported by SQL Server 2008 R2 Standard and all higher editions.

Every edition of SQL Server 2008 and later can restore a compressed backup. Backup Compression in SQL Server

Database Status

You can decide whether you want the database to be in a recovery or read-only state. Read-only gives you the ability to let other processes, like SSRS or ETL, read production data without interfering with the actual production instance.

Schedules

When to run the backup of the transaction log. Does it need to run every 15 minutes, which is the default, or every hour, once a day, etc? The same goes for the copy and restore schedule.

Cleanup

How long do you need to hold on to the backups? The default is three days which in some cases can be quite long.

Setup

This is where it’s going to be fun. As an example, I’ll be using the command with the minimum of parameters. To do this a couple of the requirements need to be met. Let’s go through these prerequisites:

- Setup the shares for the backup and copy destination

- Privileges for primary and secondary instances to the backup and copy shares are set

- Database(s) that need to be log shipped

- Set the database in read-only or no-recovery mode

- Compress or not compress the backup

- Generate or use an existing full backup

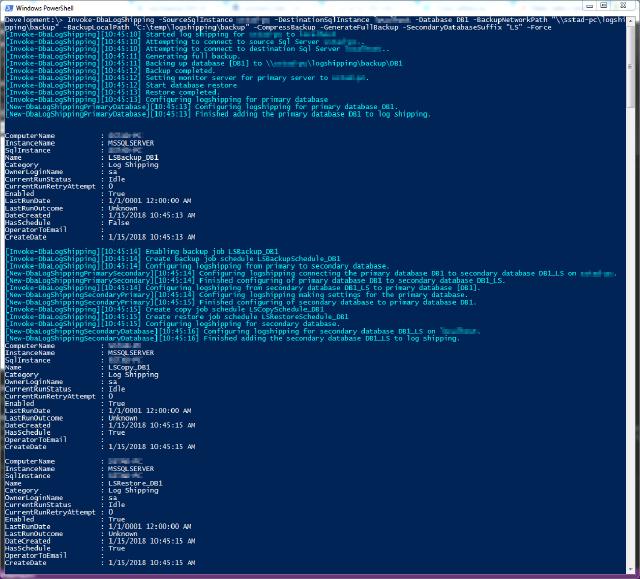

The command below will log ship the database from instance SQL1 to SQL2 by generating a full backup. The backups will be compressed and to initialize the backup a new full backup is generated. No other settings are made. To make sure all the settings are met the -Force parameter is used to enable all the assumptions.

$params = @{

SourceSqlInstance = 'SQL1'

DestinationSqlInstance = 'SQL2'

Database = @('DB1', 'DB2', 'DB3')

BackupNetworkPath = '\\SQL1\Backup'

BackupLocalPath = 'E:\logshipping\backup'

CopyDestinationFolder = '\\SQL2\Copy'

CompressBackup = $true

GenerateFullBackup = $true

Force = $true

}

Invoke-DbaLogShipping @params

The result:

This is only a fraction of the parameters available. The remaining parameters make it possible to configure your log shipping exactly the way you want it to be.

Execute the following command to get more information:

Get-Help Invoke-DbaLogShipping -Detailed

The next blog will be about the command Test-DbaLogShippingStatus .

It’s responsible for testing if all of your log-shipped databases are in a synchronized state.