For the coming T-SQL Tuesday I want to share some of my experiences with a database with data modeling gone wrong where I was the DBA.

To know where all the bad data modeling came from you have to know the history of the piece of software. About 10 years ago there was hype around saving data in XML files. XML was supposed to be a good solution to save data in a formatted way without the hassle of using databases or formatted text files.

Although this seemed a good solution the company grew fast and suddenly there were performance issues with the application because of the large number of files in a directory.

If you have more than 100.000 files in an NTFS directory things start to get slow. So the developer decided that the solution would be to save the data in a database. This way the problem with the number of dossiers was fixed and the data was securely saved for future use.

This is where the problem comes in. What the developer did was save the entire XML data file into a text data type column for all the files. At the time SQL Server already had the XML datatype but this wasn’t used because the XML was not a 100% valid XML code. This of course made things for SQL Server a bit more difficult to predict.

In other words, this went from bad to worse. Besides saving the data in a text data type, to read the data the developer didn’t use the newly developed XQuery methods but used the OPENXML method for retrieving specific data from an entire XML string.

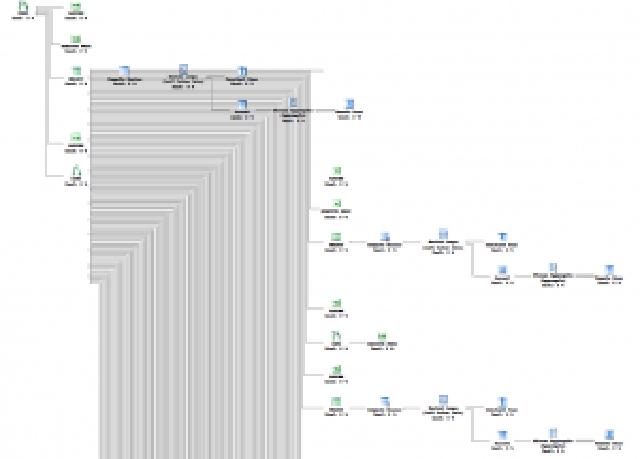

Jeff Wharton wrote a nice article why not use OPENXML. This made things worse for SQL Server because estimations couldn’t be made and SQL. The query plan for updating all the different records would look something like this:

I wasn’t able to create a print screen for the entire query plan. It was simply too big. Instead, I tried to zoom out until you could somewhat see what happened during the update of a single dossier.

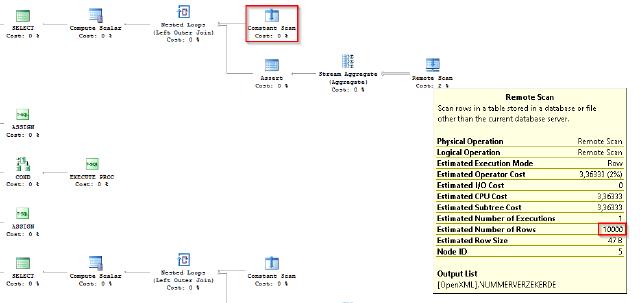

By updating the data this way SQL Server didn’t have any way to estimate the number of rows and therefore would not use its full potential. In the next image, you’ll see what happens when the OPENXML statement was called for a specific part of the query.

Keep in mind that this part would be the same for all the lines you see in the image shown earlier.

As you can see SQL Server estimates the number of rows to 10.000 by the optimizer. This, of course, isn’t right but because SQL Server acts like the selected data is from outside SQL Server it has to create some estimate to get things done.

That alone was just the start. Because this was a product from an external developer we couldn’t make any changes to the database. Unfortunately, the company wanted to use the data from the system for internal processes.

Using the XML data wasn’t an option so the developer made so-called query tables. The query tables had the same data that was present in the XML tags but then solely for that entity and not the entire dossier.

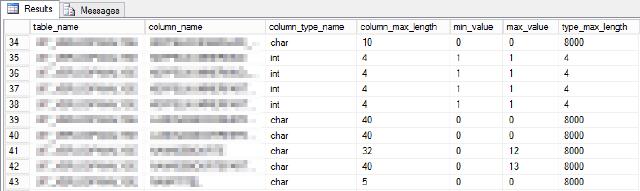

That’s nice wouldn’t it be that there were some really bad choices in the data types of a variety of tables? I will elaborate on the data types further along the story. Thus far this was not too good but I went a little further. I’ve been taught to use the data types that support the data that will be saved.

I’ve seen a lot of situations where a column was set up with a data type CHAR with a length of 80 but the longest value saved in the column was 30 characters long. This means I have to save up at least 50 bytes of data that I don’t use.

That doesn’t sound like much but multiply it by a million and this will get ugly very fast. Look at the example below:

This table has 148 columns and all the columns were set up with the CHAR datatype. I made a small estimation and summed up all the lengths of the columns and summed all the lengths of the max value present.

It turned out that the number of bytes in the columns was a total of 1602. The total of the max lengths of the columns was 582. That is a difference of 1020 bytes per row!

Multiply this by the 70 million rows present and you have a whopping 66 GB of data that’s not being used. This example was just one of the many tables present in the database.

In the end, we stopped the analysis and wrote a report to the developer. Fortunately, they’re going to fix this but there’ll be a good amount of time passing before everything is fixed. Hope you enjoyed the article for the T-SQL Tuesday and any comment is appreciated.