Problem

The business uses a database that is 350 GB database in size and has only one data file and one transaction log file. The previous DBA didn’t increase the amount of data files which lead to a large data file. Due to the size of the data file, I got into trouble with the performance, and restoring the database wasn’t a walk in the park either. So how can split a large data file into smaller data files?

What are the options?

- Do nothing and let the file grow when needed

- Split the file up into multiple data files

Solution number 1 is not a solution and will bite you in the long run because the database will grow so large it will not be easy to maintain anymore. Solution number 2 will make sure the database has multiple files which could deliver an increase in performance and will be easier to restore. This solution can have to be done in two ways:

- Have one file group with all the data files

- Work with multiple file groups and multiple files

In option number one you’ll have a single file group (the PRIMARY file group) that holds all the files. SQL Server will spread out the data over all the files in that file group equally. In option number 2 you can make one or more file groups containing at least one data file which you can specifically move tables.

Because I’m not 100% familiar with the performance per table and I didn’t have enough time to investigate this, I chose to use the PRIMARY filegroup and create several data files. I wanted to spread out the data over multiple data files because I wanted the original file to be emptied and the new files to be filled. This was not a one-stop-go action because I had to face several issues:

- When the data from the original file is moved to other files, the original data file maintains the original size.

- Because of issue 1 I had to create more space. I didn’t have the space on the original server and had to place the data elsewhere temporarily.

- Placing the files on another server or share isn’t possible because SQL Server doesn’t allow you to place database files on file shares

- Attaching an extra disk wasn’t possible in a timely fashion

Solution

To solve the issue that SQL Server doesn’t allow database files to be placed on file shares I enabled trace 1807. This trace bypasses several checks of SQL Server from where it’s possible to place the files on file shares. See the code below:

DBCC TRACEON(1807)

The information about the trace flag was found in Varun Dhawan’s blog post which can be found here .

I created a file share on another server which pointed and gave the SQL Server service account modify rights on that share on NTFS and full control of the share. I then created 10 data files to make sure the data files get a size of around 35 GB each which is easy to handle.

TIP: Make sure the growth of the new data files is set to at least 1 GB because the continuous growth will hurt the performance of the data move._ To empty the original data file

I used the following script:

USE [dbname]

DBCC SHRINKFILE('datafilename', EMPTYFILE)

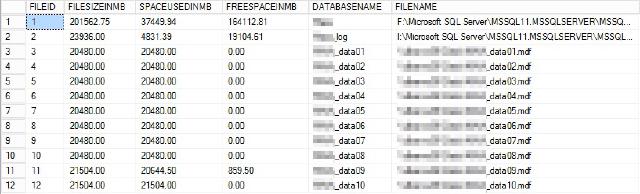

This code will run for hours! Make sure there is enough space for the new data files to grow otherwise the process will exit. If the process in some way quits just run the shrinkfile command again and SQL Server will continue the move of data. I used the script below to see how the files got filled:

SELECT a.fileid,

CONVERT(DECIMAL(12, 2), Round(a.size / 128.000, 2)) AS

[FILESIZEINMB],

CONVERT(DECIMAL(12, 2),

Round(Fileproperty(a.NAME, 'SpaceUsed') / 128.000, 2))

AS [SPACEUSEDINMB],

CONVERT(DECIMAL(12, 2), Round(

( a.size - Fileproperty(a.NAME, 'SpaceUsed') ) / 128.000, 2)) AS

[FREESPACEINMB],

a.NAME AS

[DATABASENAME],

a.filename AS

[FILENAME]

FROM dbo.sysfiles a

This return the following result:

As you can see the new data files are filled proportionally with the data from the original data file. The transaction log file is begin used because the data goes through the transaction log file and then gets written to the new data files.

At the same time, the free space in the original data files increases. When the process finished I was still having a large data file with a lot of space available. Because this was the primary file that contains all the system objects for the database I first shrunk the primary data file with the following command:

USE [dbname]

GO

DBCC SHRINKFILE (N'datafile' , 0, TRUNCATEONLY)

GO

Because the file didn’t bother me I adjusted the initial size of the data file to be very small like 100MB and set it to have a maximum size of 100MB:

USE [dbname]

GO

DBCC SHRINKFILE (N'datafile' , 100)

GO

USE [master]

GO

ALTER DATABASE [dbname] MODIFY FILE ( NAME = N'datafile', MAXSIZE = 100MB , FILEGROWTH = 1MB )

GO

This will make sure the primary data file will never grow again and all the data that gets written will be written to the new data files. Although the database works I wouldn’t want the files to be like they were spread across multiple locations. I detached the database to be able to move the data files around

USE [master]

GO

ALTER DATABASE [dbname] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

GO

USE [master]

GO

EXEC master.dbo.sp_detach_db @dbname = N'dbname'

GO

… then moved the files from the file share to the database server data directory and attached the database again where I needed to look up the new data files because originally they were placed in an alternate location.

At last, I had to clean up the changes I made by removing the file share and undoing the trace. Undoing the trace is done with the following command

DBCC TRACEOFF(1807)

Conclusion

The process took a very long time. In this situation, it took me more than 17 hours to get the database configured and to get the data moved. Besides the fact that this took a very long time, I’m glad about the result.

The next step could be to identify possible performance killer tables that can be placed in separate files on other file groups but that is a whole different article altogether. I hope you can use this information for your actions and comments are always welcome!